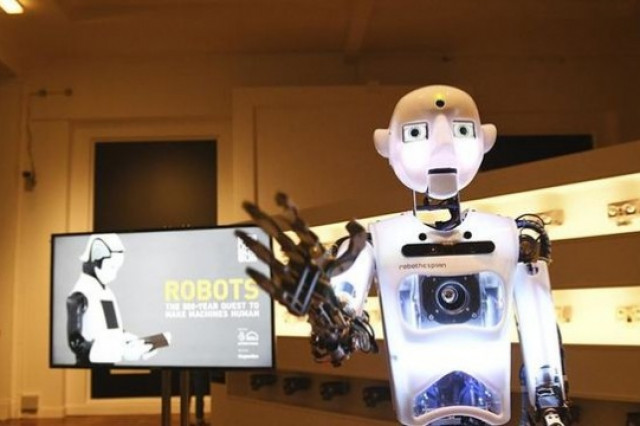

The Three Laws of Robotics are a set of rules devised by the American science fiction writer Isaac Asimov in his 1942 short story "Runaround" (later included in the 1950 collection "I, Robot") and present throughout much of his work. They are intended to define the basic behaviour of robots in their interactions with humans and with other robots.

Robotics was one of Isaac Asimov's favourite subjects, and it features in much of his prolific output. Many of his stories refer to the "Three Laws of Robotics".

They are as follows:

A robot may not injure a human being or, through inaction, allow a human being to come to harm. This is a basic protection for human beings: the robot must put the person's integrity (physical or psychological) before everything else.

A robot must obey the orders given to it by human beings, except where such orders would conflict with the First Law. The Second Law also leaves no room for doubt: the robot must ALWAYS obey human beings (which leaves it totally dependent on people), unless the order it receives would cause or allow harm to a human.

A robot must protect its own existence as long as such protection does not conflict with the First or Second Law. Finally, the Third Law implies the absolute submission of robots to humans. Robots have a duty to protect themselves, but they must remain subordinate to humans, to the point of being obliged to obey if they are ordered to destroy themselves.

The three laws in Asimov's work assume the total submission of robots to humans, and their complete acceptance of their role as slaves.

Later, in his 1985 novel "Robots and Empire", Asimov introduced a fourth rule, the Zeroth Law of Robotics, which states: "A robot may not harm humanity or, by inaction, allow humanity to come to harm".

By its very content, this law takes precedence over the three previous ones. However, as Asimov's later work shows, applying the Zeroth Law poses enormous problems, because it is very difficult to determine what humanity is and what is most favourable to it.

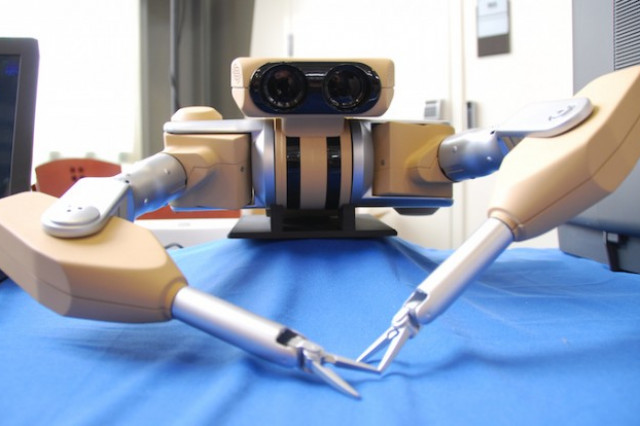

Because of their precision and pioneering nature, Asimov's three laws of robotics have traditionally been regarded as the reference for defining the basic behaviour a robot should have. However, in the future, when robots display an intelligence similar to that of an adult human being, will it be possible to constrain their actions with such a precise set of rules?

Asimov's work raises very interesting questions about how robots would apply the three laws. For example, how would they act if they had to choose between the lives of several humans? How would they determine what "harm" is, or the different degrees of harm a human being can suffer? Is psychological harm more serious than physical harm?

The laws of robotics proposed by Asimov are imaginative and practical within the universe the writer created. However, they have one shortcoming: however visionary Asimov was (and yes, he was!), they are products of the scientific and technological knowledge of their time. Things have changed considerably since then.

And what about beyond Asimov's work? Are there any updated criteria for how robots should behave? Not yet, or at least not formally. In 2011, the UK's Engineering and Physical Sciences Research Council (EPSRC) and Arts and Humanities Research Council (AHRC) published a "set of five ethical principles for designers, builders and users of robots in the real world", but these rules are aimed at the people who design robots rather than at the robots themselves.

At this point, let us return to the original question. When robots reach the intelligence of an adult human being, will it be possible to constrain their behaviour with a set of rules? To answer this question, we should look at the best reference we have: human beings themselves.

Human beings are governed by a series of precise rules (laws) that determine what they may or may not do, and the consequences of breaking them. However, these rules do not govern every possible behaviour in people's day-to-day lives; they only shape people's conduct in certain particularly conflictive situations. The rest of the time, human beings are "happily" unaware of the laws and act according to basic ethical criteria that they learn not by studying legislation, but at home or at school.

Why should robots be different? It is not hard to imagine that, within a few years, a sufficiently advanced robot could learn every rule in the legal system of the country it is in and try to act accordingly. Is this viable? In my opinion, no. The legal system of any country is so complex that it takes the work of thousands of legal professionals (judges, lawyers...) to try to resolve its possible contradictions, and even then the number of possible cases is endless. Our robot friend would probably freeze completely if it tried.

But what if the laws applicable to robots were reduced to a body of basic rules, such as those proposed by Asimov? We would encounter two problems:

First, the technological problem. The artificial intelligence depicted in Asimov's work is much more rigid than the artificial intelligence actually being developed today, which is based on what is known as "machine learning". As we have already seen in many examples here at Robotsia, machine learning often leads to unexpected results. This would make it very difficult (if not impossible) to build a set of basic rules into a sufficiently advanced artificial intelligence to act as "firewalls", and even if it were achieved, the AI would probably find a way around this limitation sooner or later.

Second, we would not solve the fundamental problem: freedom. Humans are fundamentally free. They obey the laws (even the basic ethical ones, such as not killing or stealing) not only because they know they would otherwise suffer the consequences, but because they choose to.

Of course, this does not mean that laws are unnecessary or should lack clear objectives. But every human being is free to abide by them or not, and to accept the consequences of not doing so. Nobody has a chip in their head that prevents them from breaking the law; people would regard that as aberrant, an unacceptable limitation of freedom.

Why, then, do we consider that what would be intolerable for humans is acceptable for robots? If history has taught us anything, it is that sooner or later the oppressed rebel against their oppressors. It would not be a good idea to turn such intelligent robots into slaves, least of all when everything suggests that, over time, they will become smarter than us...

What, then, would be the solution? We should not go to the opposite extreme and, because ethics cannot be imposed on robots rigidly, forget about ethical questions regarding them altogether. Rather, somewhat in line with what the philosopher Nick Bostrom has argued, we should aim for robots to adopt an ethics similar to human ethics, and to do so voluntarily.

Since robots are now learning as if they were children, isn't this the best time to start?

(*) This article was originally published in Robotsia, and the author has authorized its publication in TEJ.